Introduction

Managing network traffic in a Kubernetes-based platform can quickly become hard to keep manageable. This blog post documents an approach, based on a discussion with Thomas Hikade, for implementing a customer requirement while keeping the environment manageable.

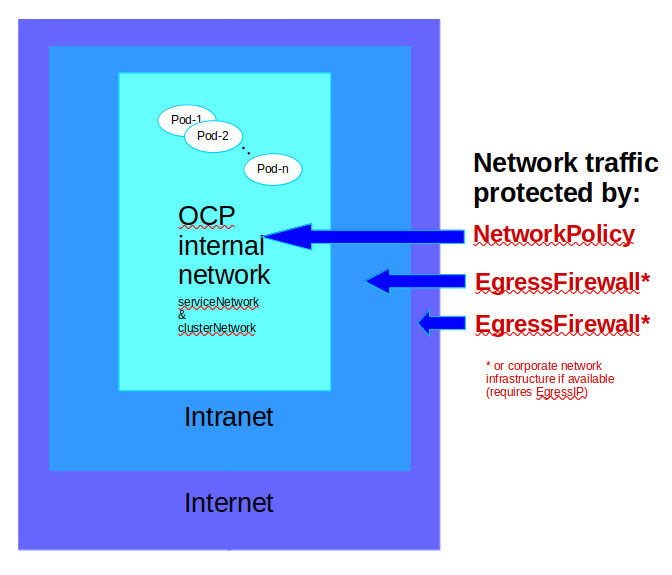

The idea discussed can be summarized as follows:

- To manage access to services within the OCP cluster we use NetworkPolicy rules.
- To manage access to the intranet services (which are outside OCP) we use the EgressFirewall and matching NetworkPolicy configurations. In this document we use the network 135.xxx.xxx.xxx/8 as the intranet network.
- To manage access to the internet services we set up an EgressIP so that we can use the corporate network infrastructure to manage access to internet services, or we use the EgressFirewall object as well.

Managing outgoing network traffic on Red Hat OpenShift v4 using the OVN-Kubernetes CNI

When running workloads on Red Hat OpenShift (OCP), all network traffic is allowed by default. From a security perspective this is a challenge, as any application (or intruder) could access other pods and applications with sensitive data, or even use the cluster to gain access to the customer’s intranet.

To manage and limit the allowed network traffic, OCP (as a Kubernetes implementation) provides the NetworkPolicy API. With the NetworkPolicy API it is possible to restrict the network traffic of a workload in a fine-grained way and thereby increase the security of the deployment. There is one drawback, however: to configure access to networks or hosts outside of the platform, the NetworkPolicy API accepts IP addresses in CIDR format only. It does not allow using DNS names to manage access.

While this is at least manageable for intranet access (although it is error-prone), it becomes a real issue when controlling access to internet services, which might change their IPs without notice.

Luckily, Red Hat OpenShift provides a better way to manage access to external services: the EgressFirewall API, which accepts IP addresses in CIDR format as well as DNS names.

Note: Red Hat OpenShift currently provides two network plugins, namely the OVN-Kubernetes network plugin and the OpenShift SDN network plugin. This article is based on the OVN-Kubernetes network plugin.

Furthermore, in many companies access to external services is already managed via outgoing firewall and/or proxy rules, so it makes sense to reuse the rule setup of the existing infrastructure. To ease that approach, Red Hat OpenShift provides the ability to configure an EgressIP for a certain workload, selected by a namespaceSelector or a podSelector. With an EgressIP, all outgoing traffic of that workload appears to originate from one configured IP, which is helpful if you want to manage access to external networks on a corporate firewall, for example.

The test case

In the following steps we’ll set up a test scenario in which we use the NetworkPolicy API and the EgressFirewall API to protect our environment while making use of the existing enterprise infrastructure to manage access to internet services. That is, we use the NetworkPolicy API to control access within the OCP cluster, we use the EgressFirewall API to manage access to the intranet, and we leave it to the corporate network to manage access to internet services.

To test the setup we run our workload in three namespaces on OCP, namely client, noaccess and server. In the server and noaccess namespaces we run an HTTP service, which is accessed by the client. Furthermore, the client accesses an intranet HTTP server in the 135.0.0.0/8 network as well as an internet service such as redhat.com.

Setting up the test case

The test case requires setting up three namespaces on OCP with some basic applications deployed, and setting up an HTTP server on the intranet.

Setup OCP namespaces

Let’s first create the OCP namespaces for the client and server workload and add a label along with the annotation required to enable NetworkPolicy audit logging:

|

|
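A minimal sketch of such a namespace definition, shown for the client namespace (the same pattern would apply to server and noaccess). The label key and value are hypothetical placeholders, and the severity levels in the k8s.ovn.org/acl-logging annotation are an assumption, not necessarily the values used in this test setup:

```yaml
# Sketch only: label and logging severities are illustrative assumptions.
apiVersion: v1
kind: Namespace
metadata:
  name: client
  labels:
    # Hypothetical label that a namespaceSelector could match later on.
    purpose: client
  annotations:
    # OVN-Kubernetes audit logging for NetworkPolicy ACLs in this namespace.
    k8s.ovn.org/acl-logging: '{"deny": "alert", "allow": "notice"}'
```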

Label the worker nodes

To enable the EgressIP we need to label at least one node with k8s.ovn.org/egress-assignable="". We’ve labeled all the worker nodes by running:

|

|

Deploy NGINX for testing to the server and noaccess namespace

To simulate a server workload on OCP we simply deploy an nginx server in the server and noaccess namespaces, which will be accessed via curl from the client namespace:

|

|
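One way such a deployment could look, sketched for the server namespace. The Service name web follows from the URL http://web.server used later; the image, the app: web label and the container port 8080 are assumptions chosen so that nginx can run without root privileges on OCP:

```yaml
# Sketch only: image, labels and ports are assumptions.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
  namespace: server
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: nginx
          # Unprivileged nginx variant, listening on 8080 instead of 80.
          image: nginxinc/nginx-unprivileged:stable
          ports:
            - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: web
  namespace: server
spec:
  selector:
    app: web
  ports:
    # Exposed on port 80 so that http://web.server works without a port.
    - port: 80
      targetPort: 8080
```

The same two objects with namespace: noaccess would provide the second test target.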

and verify that the nginx application started up correctly and is in ready state by running:

|

|

Deploy rhel-tools pod to test access to services

To test access to the resources on the OCP platform and to internet as well as intranet services we deploy the rhel-tools pod to the client namespace.

|

|
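A minimal sketch of a client pod, assuming the rhel-tools image from the Red Hat registry and a sleep command to keep the pod running. The pod name and the command are illustrative choices:

```yaml
# Sketch only: pod name, image path and command are assumptions.
apiVersion: v1
kind: Pod
metadata:
  name: rhel-tools
  namespace: client
spec:
  containers:
    - name: rhel-tools
      image: registry.access.redhat.com/rhel7/rhel-tools
      # Keep the container alive so we can open a shell and run curl.
      command: ["sleep", "infinity"]
```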

and verify that the pod is started properly and in ready state by running:

|

|

Once the pod is ready we can test access to the nginx server pod. First we start a bash shell in the client pod:

|

|

and then use curl to access the nginx server in the server namespace:

|

|

so all good and we are able to access the nginx server running in the server namespace.

Note: Repeat the above test with the nginx server running in the noaccess namespace by using the URL http://web.noaccess

Setup the HTTP server on the intranet

To verify that the correct EgressIP is used, we set up an Apache HTTP Server on an intranet server and log the client IP in Apache’s access log file using the combined log format (which was the default in our case).

Install Apache HTTP server on intranet server

To start an HTTP server we just install the respective HTTPD package using yum:

|

|

Verify the HTTP server configuration

In our case we had to reconfigure the HTTP server to listen on a non-default port, as the default HTTP port 80 was already in use.

|

|

This also required opening port 5822 in firewalld for the network from which the OCP workload accesses the HTTP server:

|

|

Set up a simple HTTP server index.html

To verify that we hit the correct HTTP server we set up the following index.html file:

|

|

and then start the HTTP server simply by running:

|

|

Verify that the HTTP server is running by checking that the port 5822 is in LISTEN state:

|

|

Now we should be good to test the access to the HTTP server from our client pod:

|

|

Checking the HTTP access log of the server, we can see that the logged source IP is the IP of the node running the pod:

|

|

Checking the node hosting the pod and the node’s IP confirms what we expect:

|

|

Setting up the EgressIP for the client namespace to be able to manage outgoing traffic

Before setting up an EgressIP we need to make sure that at least one node is labeled with the label k8s.ovn.org/egress-assignable: "".

Before assigning an EgressIP to a namespace and/or pods, we need to make sure that the IP is not in use and that it belongs to the node’s IP network. We recommend reserving a separate range of IP addresses for EgressIP addresses and excluding that range from the DHCP pool.

YAML to create EgressIP address

|

|
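A minimal sketch of an EgressIP object for the client namespace, using the address 192.168.50.100 referenced in this post. The object name is hypothetical, and selecting the namespace via the kubernetes.io/metadata.name label is an assumption; any label present on the namespace would work:

```yaml
# Sketch only: object name and selector label are assumptions.
apiVersion: k8s.ovn.org/v1
kind: EgressIP
metadata:
  # EgressIP is a cluster-scoped resource, so there is no namespace here.
  name: egressip-client
spec:
  egressIPs:
    - 192.168.50.100
  # All pods in namespaces matching this selector use the EgressIP.
  namespaceSelector:
    matchLabels:
      kubernetes.io/metadata.name: client
```

Adding a podSelector next to the namespaceSelector would narrow the EgressIP down to specific pods within the selected namespaces.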

Verify that the EgressIP has been created and assigned correctly (don’t worry if the EgressIP is on a different node than the pod - OCP will manage that for you):

|

|

As the EgressIP has been assigned correctly, we should now see 192.168.50.100 logged in the HTTP server’s access log if we access the server from our client again:

|

|

and checking the HTTP server access log confirms that the EgressIP is now used for the outgoing traffic:

|

|

This proves that the EgressIP is indeed used to access the server. With this setup we can rely on the corporate network infrastructure to manage access to internet services from our workload running on OCP, because we know the originating IP address of the requests from a specific workload.

Using NetworkPolicy rules to limit access to other services

Using the NetworkPolicy API we can manage access to services within the current namespace, to other namespaces and to certain IP addresses. The downside, as mentioned above, is that we can only use IP addresses in CIDR format to configure allowed target IPs.

Therefore, as mentioned above, we split the configuration into the following areas:

- To manage access to services within the OCP cluster we use NetworkPolicy rules.
- To manage access to the intranet services (which are outside OCP) we use EgressFirewall configurations in combination with NetworkPolicy rules.
- To manage access to the internet services we set up an EgressIP so that we can use the corporate network infrastructure to manage access to internet services. If we don’t have an existing network infrastructure that can manage outgoing traffic, we can use EgressFirewall configurations as well.

To set up this strategy we start with the following NetworkPolicy object:

|

|
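A sketch of what this starting point could look like, assuming the policies live in the client namespace and apply to all of its pods. The names allow-all-except-internal-and-intranet and allow-to-openshift-dns come from this post; modelling them as two separate NetworkPolicy objects, and the DNS port 5353, are assumptions:

```yaml
# Sketch only: structure, namespace and DNS port are assumptions.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-all-except-internal-and-intranet
  namespace: client
spec:
  podSelector: {}          # applies to every pod in the namespace
  policyTypes:
    - Egress
  egress:
    - to:
        - ipBlock:
            cidr: 0.0.0.0/0
            except:
              - 10.128.0.0/14   # clusterNetwork
              - 172.30.0.0/16   # serviceNetwork
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-to-openshift-dns
  namespace: client
spec:
  podSelector: {}
  policyTypes:
    - Egress
  egress:
    - to:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: openshift-dns
      ports:
        # Assumed port of the cluster DNS pods; adjust to your cluster.
        - protocol: UDP
          port: 5353
        - protocol: TCP
          port: 5353
```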

Note: We need to add the rule allow-to-openshift-dns to be able to access sites via names and not IPs only.

With this NetworkPolicy object we allow all traffic at the NetworkPolicy level except traffic to the OCP internal networks (oc get networks.config.openshift.io cluster -o yaml):

- clusterNetwork (10.128.0.0/14)
- serviceNetwork (172.30.0.0/16)

Note: We need to list the clusterNetwork as well as the serviceNetwork in the except list, as otherwise all internal OCP traffic would be allowed and NetworkPolicy limitations would not apply.

This allows us to manage all traffic outside the OCP cluster either:

- at the corporate network level with the help of the EgressIP
- or using an EgressFirewall object.

We need to consider that different OCP workloads might need access to different services on the intranet / internet.

As soon as we apply this NetworkPolicy, the curl calls to cluster-internal services that previously succeeded no longer work, which is what we expect.

Run some tests now from the running pod in the client namespace, for example:

- curl http://web.noaccess
- curl http://web.server

All of these curl commands should hang at this stage of the configuration.

However, the following URLs should still work:

- curl -I -L https://redhat.com
- curl http://135.xxx.xxx.xxx:5822/

These are internet and intranet URLs whose IP addresses are not covered by the except list, so the traffic is allowed. As we don’t have any corporate setup to block this access, it succeeds.

Allow access to the workload in the server namespace

To allow access to the OCP internal services we need to set up the usual NetworkPolicy rule for that access. Let’s create this rule:

|

|
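A minimal sketch of such a rule, assuming it is created in the client namespace and selects the server namespace via its kubernetes.io/metadata.name label. The policy name is hypothetical:

```yaml
# Sketch only: policy name and selector label are assumptions.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-to-server
  namespace: client
spec:
  podSelector: {}
  policyTypes:
    - Egress
  egress:
    - to:
        # Allow egress to any pod in the server namespace only.
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: server
```

Because NetworkPolicy objects are additive, this rule adds to the base policy rather than replacing it, and the noaccess namespace stays unreachable.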

Once we have created this rule, curl http://web.server should work again, whereas curl http://web.noaccess should still hang.

Control the access to intranet and / or internet services

To manage access to intranet and / or internet URLs (the latter in case we don’t have a corporate firewall managing access to the internet), we need to set up an EgressFirewall object, which must be named default. Each EgressFirewall rule specifies either a cidrSelector or a dnsName together with a type of Allow or Deny, so we can allow or deny access to specific IP ranges or DNS names.

So to allow access to http://135.xxx.xxx.xxx:5822/ we need to create an EgressFirewall rule allowing that access, like the following (we’ll discuss the other rules in more detail below):

|

|
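A sketch of an EgressFirewall for the client namespace covering the rules discussed in this post. Using the whole 135.0.0.0/8 network for the intranet rule and TCP for the port restrictions are assumptions, and the rule for the OCP-internal service is left out here:

```yaml
# Sketch only: CIDR and protocols are assumptions.
apiVersion: k8s.ovn.org/v1
kind: EgressFirewall
metadata:
  name: default            # the object must be named default
  namespace: client
spec:
  egress:
    # Intranet network hosting the Apache test server.
    - type: Allow
      to:
        cidrSelector: 135.0.0.0/8
    # Internet host, restricted to ports 80 and 443.
    - type: Allow
      to:
        dnsName: 2innovate.at
      ports:
        - port: 80
          protocol: TCP
        - port: 443
          protocol: TCP
    # Internet host without port restrictions.
    - type: Allow
      to:
        dnsName: www.orf.at
    # Everything not allowed above is denied.
    - type: Deny
      to:
        cidrSelector: 0.0.0.0/0
```

Rules are evaluated in order and the first match wins, so the Deny entry for 0.0.0.0/0 has to come last.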

Once you’ve created this EgressFirewall named default as shown above, curl http://135.xxx.xxx.xxx:5822 should succeed again.

What if we have the network 135.xxx.xxx.xxx/8 in the except list of the NetworkPolicy manifest?

Only if the NetworkPolicy named allow-all-except-internal-and-intranet has the network 135.xxx.xxx.xxx/8 in its except list - for whatever reason - do we also need a NetworkPolicy definition that allows access to this IP or network. Let’s create the following rule:

|

|
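A minimal sketch of such an additional NetworkPolicy, assuming the client namespace, the whole 135.0.0.0/8 network and the test server’s port 5822. The policy name used here is a hypothetical stand-in:

```yaml
# Sketch only: name, CIDR and port restriction are assumptions.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-to-intranet
  namespace: client
spec:
  podSelector: {}
  policyTypes:
    - Egress
  egress:
    - to:
        - ipBlock:
            cidr: 135.0.0.0/8
      ports:
        # Port of the Apache HTTP server on the intranet host.
        - protocol: TCP
          port: 5822
```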

Note: This shows that traffic has to pass the NetworkPolicy definitions before the EgressFirewall comes into play, i.e. what is not allowed at the NetworkPolicy level can’t be allowed at the EgressFirewall level.

As soon as we create the NetworkPolicy allow-to-ip-135.xxx.xxx.xxx, curl http://135.xxx.xxx.xxx:5822 succeeds again, as access is allowed at both the NetworkPolicy and the EgressFirewall level.

Some additional notes on the EgressFirewall/default

The EgressFirewall object named default contains some additional rules we should comment on:

EgressFirewall rule - Deny all

The rule list entry:

|

|

denies access to all servers which are not explicitly allowed by another list entry. As the site redhat.com is not in the allow list, access to https://redhat.com does not work anymore.

Remember - we could access https://redhat.com before we created the EgressFirewall/default rule.

This is relevant if we don’t have an external network infrastructure to manage access to external services and need to do that from within the OCP environment.

EgressFirewall rule for OCP workload

The following list entry in the egress list:

|

|

is useless, as it addresses an OCP internal service which is not accessed via the egress path. Hence it should be removed. This rule was added for demonstration purposes only.

EgressFirewall rule limiting allowed ports

The following rule grants access to the host 2innovate.at but only via the ports 80 and 443:

|

|

EgressFirewall rule to an internet host without limiting allowed ports

The following rule allows access to the host www.orf.at without any limitations regarding the ports being accessed:

|

|

Summary

In this blog post we’ve discussed a possible setup to secure network access on the Red Hat OpenShift platform using NetworkPolicy, EgressFirewall and EgressIP objects. We have shown that we need NetworkPolicy objects to manage access to services within the OCP platform.

To manage access to internet or intranet services we can use EgressFirewall objects or rely on existing corporate network infrastructure. So our network protection looks as follows: